Archive for the ‘Algorithms’ Category.

Oct 21st, 2007| 03:59 pm | Posted by vlk

wavdetect is a wavelet-based source detection algorithm that is in wide use in X-ray data analysis, in particular to find sources in Chandra images. It came out of the Chicago “Beta Site” of the AXAF Science Center (as the CXC was called before launch). Despite the fancy name, the complicated mathematics, and the devilish details, it is really not much more than a generalization of the earlier local cell detect method: a local background is estimated around a putative source, and one asks whether the signal seen in this pixel is significantly higher than expected. However, unlike previous methods that used a flux measurement as the detection criterion (e.g., using signal-to-noise ratios as a proxy for a significance threshold), it tests the hypothesis that the observed signal can arise as a fluctuation of the background. Continue reading ‘The power of wavdetect’ »
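The hypothesis-testing idea above can be sketched in a few lines. This is a minimal illustration and not wavdetect's wavelet machinery. It assumes the expected background `background` in a cell is already known, and it asks how probable at least `n_obs` counts would be as a pure Poisson fluctuation. The function names and the `false_prob` threshold of 1e-6 are illustrative assumptions.

```python
import math


def poisson_tail(n_obs: int, background: float) -> float:
    """P(N >= n_obs) for N ~ Poisson(background)."""
    if n_obs <= 0:
        return 1.0
    term = math.exp(-background)  # P(N = 0)
    cdf = term
    for k in range(1, n_obs):
        term *= background / k  # P(N = k) obtained from P(N = k - 1)
        cdf += term
    return max(0.0, 1.0 - cdf)  # upper tail = 1 - P(N <= n_obs - 1)


def is_detection(n_obs: int, background: float, false_prob: float = 1e-6) -> bool:
    # Reject "this is just a background fluctuation" when the tail probability is tiny.
    return poisson_tail(n_obs, background) < false_prob
```

For example, 20 counts over an expected background of 2 is flagged. Three counts is not flagged, because P(N ≥ 3) ≈ 0.32 for a background of 2. A signal-to-noise cut would not ask this question directly.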

Tags: AXAF, ChaMP, Chandra, ciao, Power, source detection, Type II error, wavdetect, wavelet

Category: Algorithms, Imaging, X-ray


Oct 5th, 2007| 04:47 pm | Posted by hlee

Not knowing much about Java and Java applets, either for software development or for web publishing, I cannot comment on which approach is more efficient. Nevertheless, I thought PHP could do a similar job in a simpler fashion. The following may provide some ideas and solutions for publishing statistical methods based on Bayesian inference through websites.
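Whatever the web language, the core of such a site is Bayes’ rule over a finite set of classes. The sketch below is in Python, although the post itself is about PHP. The class labels in the usage note are hypothetical.

```python
def posterior(priors: dict, likelihoods: dict) -> dict:
    """Bayes' rule over finitely many classes: P(c | x) is proportional to P(x | c) P(c)."""
    unnorm = {c: priors[c] * likelihoods[c] for c in priors}
    evidence = sum(unnorm.values())  # P(x), the normalizing constant
    if evidence == 0:
        raise ValueError("the data have zero probability under every class")
    return {c: v / evidence for c, v in unnorm.items()}
```

With equal priors for hypothetical classes "star" and "galaxy" and likelihoods 0.8 and 0.2, the posterior is simply 0.8 and 0.2. A page would only need to collect the priors and likelihoods from a form and display this dictionary.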

Continue reading ‘Implement Bayesian inference using PHP’ »

Tags: Bayesian Inference, Classification, Condition Probability, Estimation, IBM, JAVA, Open Source, PHP

Category: Algorithms, Bayesian, Cross-Cultural, Data Processing, Languages


Oct 3rd, 2007| 06:41 pm | Posted by aneta

I am visiting the Copernicus Astronomical Center in Warsaw this week, hence the Polish connection! I learned about two papers that might interest our group, both authored by Alex Schwarzenberg-Czerny:

1. Accuracy of period determination, (1991 MNRAS.253, 198)

Periods of oscillation are frequently found using one of two methods: least-squares (LSQ) fit or power spectrum. Their errors are estimated using the LSQ correlation matrix or the Rayleigh resolution criterion, respectively. In this paper, it is demonstrated that both estimates are statistically incorrect. On the one hand, the LSQ covariance matrix does not account for correlation of residuals from the fit. Neglect of the correlations may cause large underestimation of the variance. On the other hand, the Rayleigh resolution criterion is insensitive to signal-to-noise ratio and thus does not reflect quality of observations. The correct variance estimates are derived for the two methods.
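The LSQ point above is that neglecting correlated residuals underestimates the variance. A generic textbook case illustrates it, and this is not the paper's own correction. Take the variance of a sample mean when the residuals follow a stationary AR(1) process with lag-k correlation ρ^k. The AR(1) model and the function names are assumptions made for illustration.

```python
def var_mean_independent(sigma: float, n: int) -> float:
    # What an LSQ covariance matrix implicitly assumes: uncorrelated residuals.
    return sigma**2 / n


def var_mean_ar1(sigma: float, n: int, rho: float) -> float:
    # Exact variance of the mean of n stationary AR(1) values with marginal
    # standard deviation sigma and lag-k correlation rho**k:
    # Var = sigma^2 / n^2 * sum_{i,j} rho^|i-j|
    total = float(n)
    for k in range(1, n):
        total += 2 * (n - k) * rho**k
    return sigma**2 * total / n**2
```

With ρ = 0.5 and n = 1000, the ratio `var_mean_ar1(1.0, 1000, 0.5) / var_mean_independent(1.0, 1000)` is 2.996. This is close to the large-n limit (1 + ρ)/(1 − ρ) = 3. The naive standard error is therefore too small by about a factor of √3.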

Continue reading ‘Polish AstroStatistics’ »

Sep 17th, 2007| 03:36 pm | Posted by hlee

VOConvert (or ConVOT) is a small Java program that converts files from FITS to ASCII or the other way around. These tools might be useful for statisticians who want to quickly convert astronomers’ FITS data into ASCII for statistical analysis. Additionally, VOConvert creates an interim output for VOStat, which is designed for statistical analysis of data from the Virtual Observatory. The software and a list of Virtual Observatories around the world can be found at Virtual Observatory India. Please see the VOStat post (http://hea-www.harvard.edu/AstroStat/slog/2007/vostat) for more information about VOStat.
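To show what FITS-to-ASCII conversion involves at the header level, here is a minimal standard-library sketch. It is not VOConvert's code. It relies on the FITS convention of 80-character header “cards”, with the keyword in columns 1–8, “= ” in columns 9–10, and an END card. It ignores the data unit entirely and simplifies comment handling. The function names are hypothetical.

```python
from typing import List, Tuple

CARD_LEN = 80  # each FITS header card is 80 ASCII characters


def read_fits_header(data: bytes) -> List[Tuple[str, str]]:
    cards = []
    for start in range(0, len(data), CARD_LEN):
        card = data[start:start + CARD_LEN].decode("ascii")
        keyword = card[:8].strip()  # columns 1-8
        if keyword == "END":
            return cards
        if card[8:10] == "= ":  # value indicator in columns 9-10
            # Simplification: drop everything after "/" (the comment field).
            # This would also truncate string values that contain "/".
            value = card[10:].split("/", 1)[0].strip()
            cards.append((keyword, value))
    raise ValueError("no END card found")


def header_to_ascii(cards: List[Tuple[str, str]]) -> str:
    return "\n".join(f"{key} = {value}" for key, value in cards)
```

A full converter would also read the data blocks that follow the header. In practice a maintained FITS library is the safer choice.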

Sep 14th, 2007| 08:46 pm | Posted by hlee

Sep 12th, 2007| 04:31 pm | Posted by hlee

From arxiv/astro-ph:0709.1359,

A robust morphological classification of high-redshift galaxies using support vector machines on seeing limited images. I Method description by M. Huertas-Company et al.

Machine learning and statistical learning are becoming more and more popular in astronomy. Artificial neural networks (ANN) and support vector machines (SVM) are rarely missing when the objective is classifying massive survey data. The authors provide a gentle tutorial on SVM for galaxy morphological classification, and their source code, GALSVM, is linked for interested readers.
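For readers new to SVMs, the sketch below shows the basic idea. It is a generic linear soft-margin SVM trained by full-batch subgradient descent on the hinge loss plus an L2 penalty. It is not GALSVM, whose internals are not described here. The penalty `lam`, learning rate `lr`, epoch count and toy data are hypothetical choices.

```python
from typing import List, Sequence, Tuple


def train_linear_svm(X: Sequence[Sequence[float]], y: Sequence[int],
                     lam: float = 0.01, lr: float = 0.1,
                     epochs: int = 500) -> Tuple[List[float], float]:
    # Minimizes (lam / 2) * |w|^2 + mean(max(0, 1 - y_i * (w . x_i + b))).
    d, n = len(X[0]), len(X)
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w = [lam * wj for wj in w]  # gradient of the L2 penalty
        grad_b = 0.0
        for xi, yi in zip(X, y):
            margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
            if margin < 1:  # hinge loss is active for this point
                for j in range(d):
                    grad_w[j] -= yi * xi[j] / n
                grad_b -= yi / n
        w = [wj - lr * g for wj, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w: Sequence[float], b: float, x: Sequence[float]) -> int:
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1
```

A real morphology classifier would add a kernel (for example RBF), feature scaling and cross-validated hyperparameters. The linear version only illustrates the margin idea.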

Continue reading ‘[ArXiv] SVM and galaxy morphological classification, Sept. 10, 2007’ »

Sep 4th, 2007| 10:55 pm | Posted by hlee

From arxiv/astro-ph:0708.4274v1

Comparison of decision tree methods for finding active objects by Y. Zhao and Y. Zhang

The authors (astronomers) introduced and summarized various decision tree methods (REPTree, Random Tree, Decision Stump, Random Forest, J48, NBTree, and AdTree) for the astronomical community.
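The simplest method on that list, the decision stump, is a one-level tree that splits on a single feature at a single threshold. Here is a minimal sketch that chooses the split by training misclassification count, assuming labels of ±1. Implementations such as Weka's DecisionStump may use a different splitting criterion, and the function names and toy data are hypothetical.

```python
from typing import Sequence, Tuple


def fit_stump(X: Sequence[Sequence[float]], y: Sequence[int]) -> Tuple[int, float, int]:
    """Return (feature, threshold, sign) minimizing training misclassifications."""
    best = None
    for j in range(len(X[0])):
        values = sorted(set(row[j] for row in X))
        for lo, hi in zip(values, values[1:]):
            threshold = (lo + hi) / 2  # midpoint between neighboring values
            for sign in (1, -1):
                errors = sum(
                    1 for row, label in zip(X, y)
                    if (sign if row[j] > threshold else -sign) != label
                )
                if best is None or errors < best[0]:
                    best = (errors, j, threshold, sign)
    if best is None:
        raise ValueError("every feature is constant; no split exists")
    return best[1], best[2], best[3]


def predict_stump(stump: Tuple[int, float, int], row: Sequence[float]) -> int:
    feature, threshold, sign = stump
    return sign if row[feature] > threshold else -sign
```

Deeper trees (J48, REPTree) apply the same kind of split recursively and add pruning. Random Forest averages many randomized trees.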

Continue reading ‘[ArXiv] Decision Tree, Aug. 31, 2007’ »

Aug 19th, 2007| 11:35 pm | Posted by hlee

One of the most frequently cited papers on model selection is “An Asymptotic Equivalence of Choice of Model by Cross-Validation and Akaike’s Criterion” by M. Stone, Journal of the Royal Statistical Society, Series B (Methodological), Vol. 39, No. 1 (1977), pp. 44-47.

(Akaike’s 1974 paper, which introduced the Akaike Information Criterion (AIC), is the most often cited paper on model selection.)
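To make Stone’s comparison concrete, the sketch below scores two nested Gaussian models (a constant and a straight line) by AIC and by leave-one-out cross-validation. AIC is computed as n·log(RSS/n) + 2k, which equals −2 log L plus a constant when the noise variance is estimated. Here k counts that variance as a parameter. The models, data and function names are illustrative assumptions.

```python
import math


def fit_constant(xs, ys):
    m = sum(ys) / len(ys)
    return lambda x: m


def fit_line(xs, ys):
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return lambda x: my + slope * (x - mx)


def rss(model, xs, ys):
    return sum((y - model(x)) ** 2 for x, y in zip(xs, ys))


def aic_gaussian(fit, xs, ys, k):
    n = len(xs)
    return n * math.log(rss(fit(xs, ys), xs, ys) / n) + 2 * k


def loo_cv(fit, xs, ys):
    # Mean squared prediction error, refitting with each point left out in turn.
    total = 0.0
    for i in range(len(xs)):
        model = fit(xs[:i] + xs[i + 1:], ys[:i] + ys[i + 1:])
        total += (ys[i] - model(xs[i])) ** 2
    return total / len(xs)
```

On data that follow a line plus small noise, both criteria prefer the line. Stone’s result is that this agreement is no accident: asymptotically the two criteria rank models the same way.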

Continue reading ‘Cross-validation for model selection’ »

Tags: AIC, Cash statistics, cross-validation, exponential family, Fisher information, maximum likelihood, Model Selection, resampling, score, TIC

Category: Algorithms, arXiv, Frequentist, Methods, Stat


Aug 14th, 2007| 10:17 pm | Posted by hlee

During the International X-ray Summer School, as a project presentation, I tried to explain the inadequate practice of χ² statistics in astronomy. If your best fit is biased (any misidentification of the model easily causes such bias), do not use the χ² statistic to obtain a 1σ error that is supposed to have a 68% chance of capturing the true parameter.
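A toy Gaussian case shows how quickly a biased fit breaks the nominal 68%. For one parameter with Gaussian errors, the Δχ² = 1 interval is the estimate ± σ. Suppose the estimator is offset from the truth by a bias of `bias_in_sigma`·σ, for example because of a misidentified model. The interval then covers the truth only when a standard normal Z lies in [−1 − b, 1 − b]. The single-parameter Gaussian setup is an assumption for illustration.

```python
import math


def normal_cdf(z: float) -> float:
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def delta_chi2_coverage(bias_in_sigma: float) -> float:
    # estimate = theta + b*sigma + sigma*Z; the interval estimate +/- sigma
    # contains theta iff -1 <= b + Z <= 1, i.e. Z in [-1 - b, 1 - b].
    b = bias_in_sigma
    return normal_cdf(1.0 - b) - normal_cdf(-1.0 - b)
```

With no bias the coverage is about 0.683, as advertised. A bias of one σ drops it to about 0.477, even though the quoted error bar is unchanged.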

Later, I decided to investigate the subject further, and this paper came along: “Astrostatistics: Goodness-of-Fit and All That!” by Babu and Feigelson.

Continue reading ‘Astrostatistics: Goodness-of-Fit and All That!’ »

Tags: Anderson-Darling, Babu, best-fit, bias, bootstrap, chi-square, Cramer-von Mises, Feigelson, Kolmogorov-Smirnoff, Kullback-Leibler distance, nonparametric, parametric, resampling

Category: Algorithms, arXiv, Astro, Fitting, High-Energy, Methods, Spectral, Stat


Aug 8th, 2007| 05:14 pm | Posted by hlee

The X-ray summer school is ongoing. Numerous interesting topics have been presented, but not much about statistics. The only advice so far has been “use the statistics implemented in X-ray data reduction/analysis tools” and “it’s just a tool.” Nevertheless, I happened to talk extensively with two students about their research topics, which both involved finding features in light curves. One approach was very empirical, comparing gamma-ray burst trigger times to 24 kHz observations. The other was statistical and algorithmic, using Bayesian Blocks. Sadly, I could not give them answers, but the latter caught my attention.
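For the second student’s approach, here is a minimal sketch of the Bayesian Blocks dynamic program for a binned light curve with equal-width bins. It assumes the Poisson block fitness N(log N − log T) associated with Scargle’s Bayesian Blocks, with T measured in bins. It also assumes a fixed, hand-picked penalty `ncp_prior` per block rather than one calibrated to a false-positive rate. The function name is hypothetical.

```python
import math
from typing import List


def bayesian_blocks_binned(counts: List[int], ncp_prior: float = 4.0) -> List[int]:
    """Return the start indices of the optimal blocks (change points)."""
    n = len(counts)
    best = [0.0] * n  # best[R]: optimal total fitness for bins 0..R
    last = [0] * n    # last[R]: start of the final block in that optimum
    for R in range(n):
        block_counts = 0
        best_val, best_start = -math.inf, 0
        for r in range(R, -1, -1):  # candidate final block r..R
            block_counts += counts[r]
            width = R - r + 1
            fitness = (block_counts * math.log(block_counts / width)
                       if block_counts > 0 else 0.0)
            total = fitness - ncp_prior + (best[r - 1] if r > 0 else 0.0)
            if total > best_val:
                best_val, best_start = total, r
        best[R], last[R] = best_val, best_start
    starts, R = [], n - 1
    while R >= 0:  # backtrack through the optimal partition
        starts.append(last[R])
        R = last[R] - 1
    return starts[::-1]
```

A light curve with 20 bins of 1 count followed by 20 bins of 10 counts yields change points `[0, 20]`, while a constant light curve yields a single block. The search is O(n²) in the number of bins.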

Continue reading ‘Change Point Problem’ »

Tags: ARCH, Bayesian Block, challenges, change point problem, GARCH, light curves, summer school, X-ray

Category: Algorithms, Bayesian, Cross-Cultural, Data Processing, gamma-ray, High-Energy, Stat, Timing, X-ray


Jul 25th, 2007| 01:46 pm | Posted by hlee

From arxiv/astro-ph:0707.3413

The Sixth Data Release of the Sloan Digital Sky Survey by … many people …

The sixth data release of the Sloan Digital Sky Survey (SDSS DR6) is available at http://www.sdss.org/dr6. Additionally, the Catalog Archive Service (CAS) and its SQL interface to the catalog would be useful to statisticians searching for data. Simple SQL commands, which are well documented, can narrow down the size of the data and the spatial coverage.
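The kind of narrowing meant here is a spatial box plus a magnitude cut. The sketch uses Python’s built-in sqlite3 with an in-memory stand-in table. The table name `PhotoObj` and columns `objID`, `ra`, `dec` and `r` imitate SDSS naming but are assumptions, and this does not query CAS itself. The rows are made-up toy values.

```python
import sqlite3


def select_box(conn, ra_min, ra_max, dec_min, dec_max, r_max):
    query = """
        SELECT objID, ra, dec, r FROM PhotoObj
        WHERE ra BETWEEN ? AND ? AND dec BETWEEN ? AND ? AND r < ?
        ORDER BY objID
    """
    return conn.execute(query, (ra_min, ra_max, dec_min, dec_max, r_max)).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE PhotoObj (objID INTEGER PRIMARY KEY, ra REAL, dec REAL, r REAL)")
conn.executemany("INSERT INTO PhotoObj VALUES (?, ?, ?, ?)", [
    (1, 180.1, 0.2, 17.5),   # inside the box, bright enough
    (2, 180.4, -0.3, 21.9),  # inside the box, too faint
    (3, 190.0, 0.1, 16.0),   # outside the RA range
    (4, 180.2, 0.4, 19.8),   # inside the box, bright enough
])
rows = select_box(conn, 180.0, 181.0, -1.0, 1.0, 20.0)
```

Here `rows` keeps only objects 1 and 4. Against the real archive, the same WHERE clause would be typed into the CAS query form instead of being run locally.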

Continue reading ‘[ArXiv] SDSS DR6, July 23, 2007’ »

Tags: catalog, convex hull peeling, density estimation, DR6, massive data, multivariate analysis, nonparametric, SDSS, SQL, voronoi tessellation

Category: Algorithms, arXiv, Astro, Data Processing, Misc, Optical


Jul 25th, 2007| 02:28 am | Posted by hlee

Since I began subscribing to arxiv/astro-ph abstracts, one of the most frequent topics from an astrostatistical point of view has been photometric redshifts. The topic has grown popular as catalogs of photometric observations of distant objects multiply in volume and multi-band sky survey projects lead to virtual observatories (VO, to be discussed in a later posting). Simply searching for “photometric redshifts” in Google Scholar and arxiv.org returns more than 2000 articles since 2000.
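One common empirical (machine-learning) route to photometric redshifts is nearest-neighbor regression in color space. Each object receives the average spectroscopic redshift of its k closest training objects. The two-color space, k = 3 and the toy training values below are hypothetical.

```python
import math
from typing import Sequence, Tuple


def knn_photoz(train_colors: Sequence[Tuple[float, ...]], train_z: Sequence[float],
               query_color: Tuple[float, ...], k: int = 3) -> float:
    # Rank training objects by Euclidean distance in color space.
    ranked = sorted((math.dist(c, query_color), z) for c, z in zip(train_colors, train_z))
    nearest = [z for _, z in ranked[:k]]
    return sum(nearest) / len(nearest)  # mean spectroscopic z of the k neighbors
```

Real applications need a training set that covers the colors of the target sample. Biases such as Malmquist bias (one of this post’s tags) enter through how that training set was selected.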

Continue reading ‘Photometric Redshifts’ »

Tags: cosmology, distance estimation, Lutz-Kelker bias, machine learning, Malmquist bias, Photometric Redshift, spectrum, survey, VO

Category: Algorithms, arXiv, Data Processing, Galaxies, Stat


Jul 13th, 2007| 07:24 pm | Posted by hlee

From arxiv/astro-ph:0707.1611, Probabilistic Cross-Identification of Astronomical Sources by Budavari and Szalay

As multi-wavelength studies become more popular, various source-matching methodologies have been discussed. One such method, built on Bayesian ideas, was introduced by Budavari and Szalay to meet the demand for symmetric algorithms in a unified framework.
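As a rough illustration, here is a two-catalog Bayes factor for two detections with circular Gaussian positional errors σ1 and σ2, separated by angle ψ. As I read the paper’s small-angle result, B = 2/(σ1²+σ2²) · exp(−ψ²/(2(σ1²+σ2²))), with angles in radians. Treat this as a sketch to check against the paper, not a verified transcription. The function name and the arcsecond inputs are my choices.

```python
import math

ARCSEC = math.pi / (180 * 3600)  # one arcsecond in radians


def bayes_factor(psi_arcsec: float, sigma1_arcsec: float, sigma2_arcsec: float) -> float:
    # Small-angle, circular-Gaussian form; all angles converted to radians.
    s2 = (sigma1_arcsec**2 + sigma2_arcsec**2) * ARCSEC**2
    psi = psi_arcsec * ARCSEC
    return 2.0 / s2 * math.exp(-psi**2 / (2.0 * s2))
```

With 1″ errors on both positions, coincident detections give an enormous B, which strongly favors “same source.” At 10″ separation B drops below 1, which favors “different sources.” Because the formula is symmetric in the two detections, swapping the catalogs gives the same answer.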

Continue reading ‘[ArXiv] Matching Sources, July 11, 2007’ »

Tags: Bayes factor, evidence, Matching, multi-wavelength, Multiple Testing

Category: Algorithms, arXiv, Bayesian, Data Processing, Frequentist, Objects, Quotes, Uncertainty


Jul 5th, 2007| 04:13 pm | Posted by aconnors

Jeff Scargle (in person [top] and in wavelet transform [bottom], left) weighs in on our continuing discussion of how well “automated fitting”/“Machine Learning” can really work (private communication, June 28, 2007):

It is clearly wrong to say that automated fitting of models to data is impossible. Such a view ignores progress made in the area of machine learning and data mining. Of course there can be problems, I believe mostly connected with two related issues:

* Models that are too fragile (that is, easily broken by unusual data)

* Unusual data (that is, data that lie in some sense outside the arena that one expects)

The antidotes are:

(1) careful study of model sensitivity

(2) if the context warrants, preprocessing to remove “bad” points

(3) lots and lots of trial and error experiments, with both data sets that are as realistic as possible and ones that have extremes (outliers, large errors, errors with unusual properties, etc.)

Trial … error … fix error … retry …

You can quote me on that.
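Antidote (2), preprocessing to remove “bad” points, might look like the robust clipping sketch below. The median/MAD rule, the 1.4826 factor that converts MAD to a Gaussian σ, and the threshold of 5 are my illustrative choices, not Scargle’s.

```python
import statistics
from typing import List, Sequence


def mad_clip(values: Sequence[float], threshold: float = 5.0) -> List[float]:
    med = statistics.median(values)
    mad = statistics.median(abs(v - med) for v in values)
    if mad == 0:
        return list(values)  # no spread to judge outliers by; keep everything
    scale = 1.4826 * mad  # MAD rescaled to a Gaussian standard deviation
    return [v for v in values if abs(v - med) <= threshold * scale]
```

In the spirit of antidote (3), one would rerun this on simulated data with injected outliers and unusual error distributions. That checks that the clipping removes only what it should.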

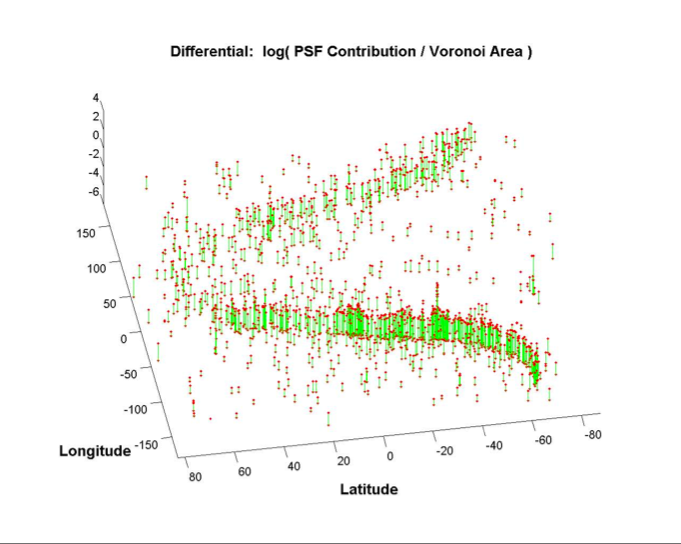

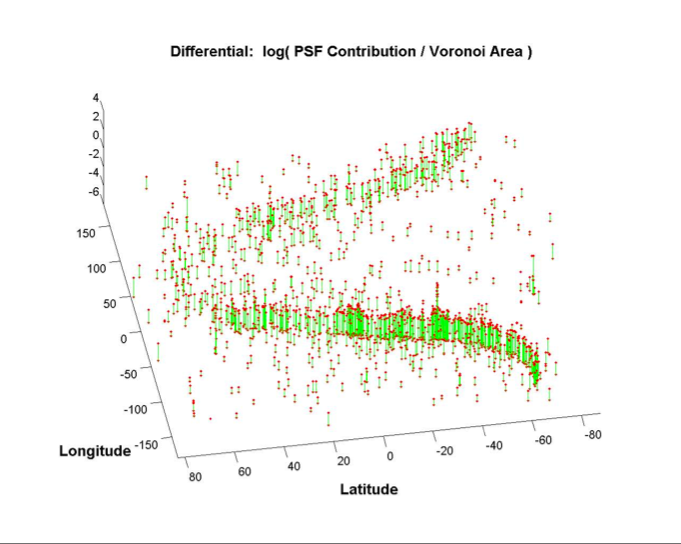

This illustration is from Jeff Scargle’s talk at the First GLAST Symposium (June 2007), p. 14. It demonstrates the use of the inverse area of Voronoi tessellation cells, weighted by the PSF density, as an automated measure of the density of Poisson gamma-ray counts on the sky.
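In one dimension the same idea is easy to state. Each event’s Voronoi cell runs between the midpoints to its two neighbors, and the inverse cell length is a local density estimate. The sketch below is a 1D analogue only. It omits the PSF weighting described above and drops the two end events, whose cells are unbounded. The function name is hypothetical.

```python
from typing import List, Sequence, Tuple


def voronoi_density_1d(positions: Sequence[float]) -> List[Tuple[float, float]]:
    xs = sorted(positions)
    if len(xs) < 3:
        raise ValueError("need at least three events")
    out = []
    for i in range(1, len(xs) - 1):
        # The cell of xs[i] spans from the midpoint with its left neighbor
        # to the midpoint with its right neighbor.
        width = (xs[i + 1] - xs[i - 1]) / 2
        out.append((xs[i], 1.0 / width))  # ZeroDivisionError if xs[i-1] == xs[i+1]
    return out
```

Events in a tight cluster get short cells and therefore high density, while isolated events get low density. No binning or smoothing scale has to be chosen by hand.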

Category: Algorithms, Astro, Data Processing, gamma-ray, High-Energy, Imaging, Methods, Quotes, Stat, Timing, X-ray


Jun 20th, 2007| 06:29 pm | Posted by aconnors

These quotes are in the opposite spirit of the last two Bayesian quotes.

They are from the excellent “R”-based Tutorial on Non-Parametrics given by Chad Schafer and Larry Wasserman at the 2006 SAMSI Special Semester on AstroStatistics.

Chad and Larry were explaining trees:

For more sophisticated tree searches, you might try Robert Nowak [and his former student, Becca Willett, especially her "software" pages]. There is even Bayesian CART (Classification And Regression Trees). These can take 8 or 9 hours to “do it right”, via MCMC. BUT [these results] tend to be very close to [less rigorous] methods that take only minutes.

Trees are used primarily by doctors, for patients: it is much easier to follow a tree than a kernel estimator, in person.

Trees are much more ad-hoc than other methods we talked about, BUT they are very user friendly, very flexible.

In machine learning, which is only statistics done by computer scientists, they love trees.